Pay attention to this crawling issue when developing with Next.js / React

Lately I ran into a weird crawling issue on my personal websites as well as on our setup at work; all of them were Next.js projects. Because I don't have many Next.js projects under my belt, I really wondered whether it was a Next.js bug or just some poorly written code on my side. Sadly for me, it was the latter. However, without failure there's no progress, so hooray!

The issue

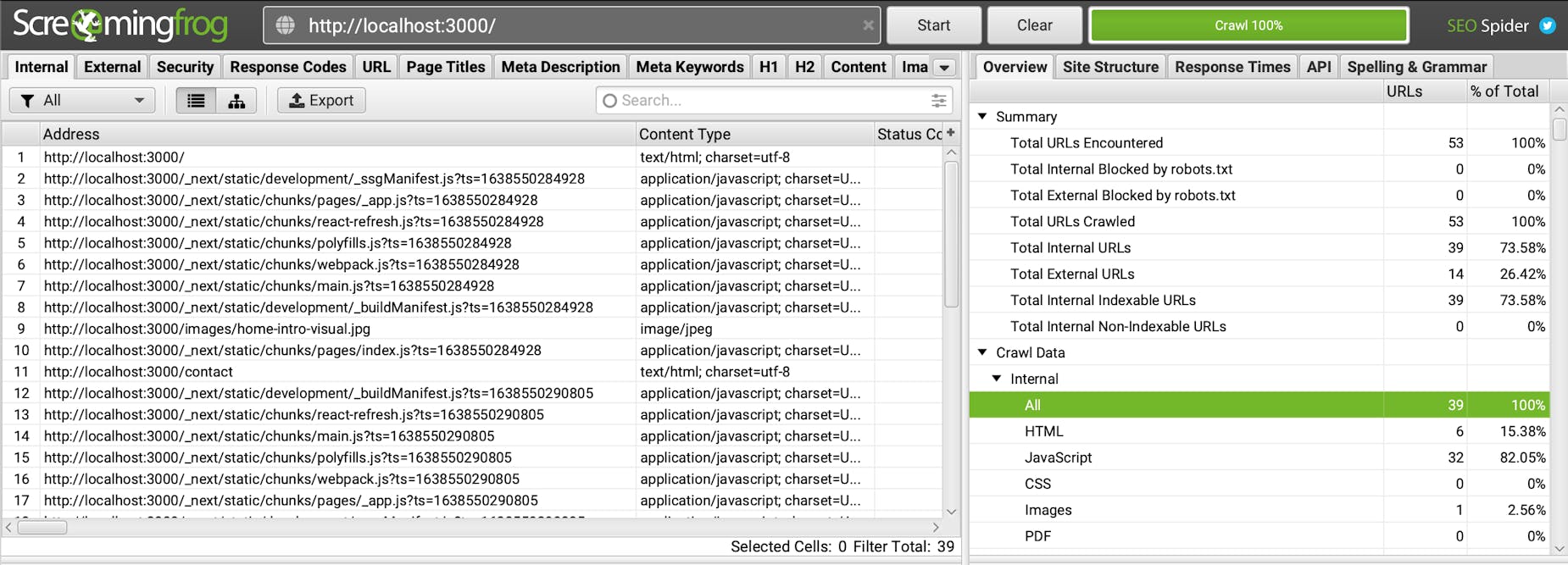

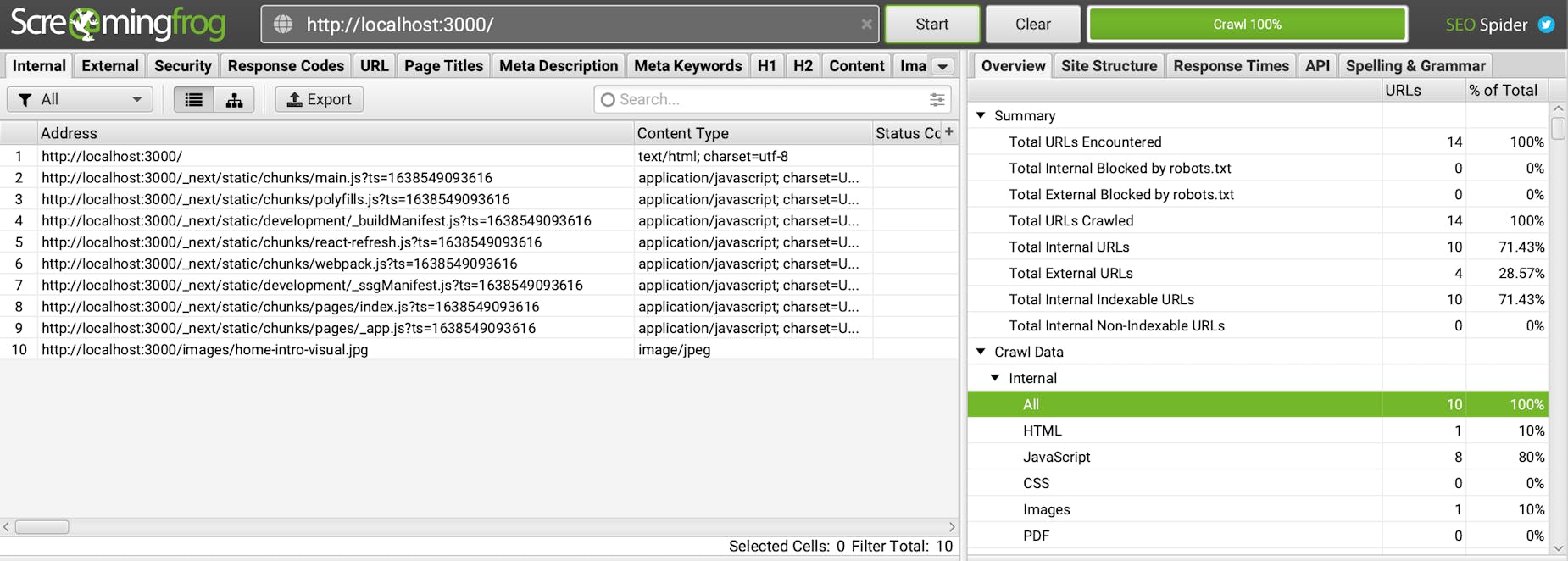

The crawling tool couldn't find all the links in my projects, and therefore it could not crawl the websites entirely. You can use several crawling tools to check the SEO of your websites; the one I used was Screaming Frog SEO Spider.
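A rough way to approximate what a crawler sees without executing JavaScript is to list the links in the raw HTML the server sends. The sketch below uses a hypothetical helper, `extractHrefs`, built on a simple regular expression rather than a real HTML parser, so treat it as a quick sanity check only. In Node.js 18+ you could feed it the text returned by the global `fetch`.

```javascript
// Hypothetical helper: list the href values of <a> tags in a raw HTML string.
// A regular expression is only a rough approximation of an HTML parser.
function extractHrefs(html) {
  const hrefs = [];
  const pattern = /<a\b[^>]*?\shref\s*=\s*["']([^"']*)["']/gi;
  let match;
  while ((match = pattern.exec(html)) !== null) {
    hrefs.push(match[1]); // captured value between the quotes
  }
  return hrefs;
}

// Usage sketch (Node.js 18+, inside an async function; the URL is a placeholder):
// const html = await (await fetch("http://localhost:3000/")).text();
// console.log(extractHrefs(html));
```

Because the helper only reads the HTML as delivered, links that appear only after client-side rendering won't show up in its output.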

The solution

Here's what actually happened: I had a Screen component that rendered some parts based on media queries. For instance, the children of a Screen component would only be rendered on devices wider than 1300 pixels. Because media queries can't be evaluated on the server, these conditions were never met during server-side rendering, so the application always started with none of these elements rendered. And because I had placed my navigation links inside a Screen component, they were never part of the initial render, and thus the crawling tool never found them.
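The problem can be reproduced without React. The following plain-JavaScript sketch mimics the render decision of a media-query-gated component; `matchesMinWidth` and `renderConditionalScreen` are hypothetical names, and the HTML strings only stand in for what React would output. Because Node.js has no `window`, running it there behaves like a server render: the query never matches, so the navigation markup is dropped.

```javascript
// Hypothetical stand-in for a media query check (e.g. inside a custom hook).
function matchesMinWidth(minWidth) {
  // During server-side rendering there is no window, so nothing can match.
  if (typeof window === "undefined") return false;
  return window.matchMedia(`(min-width: ${minWidth}px)`).matches;
}

// Mimics a conditionally rendering Screen: children are only output
// when the media query matches.
function renderConditionalScreen(minWidth, childrenHtml) {
  return matchesMinWidth(minWidth) ? childrenHtml : "";
}

const nav = '<nav><a href="/blog">Blog</a></nav>';
// In Node.js this prints "": the links never reach the initial HTML.
console.log(JSON.stringify(renderConditionalScreen(1300, nav)));
```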

So if you have important text or links that need to be found by crawlers, it's best to make sure they are part of the initial HTML that the server sends to the browser. This means you should be careful whenever you render components conditionally, which also applies when you show a loading screen, for example. My current workaround: I wrap these elements in a div whose display is set to none whenever they need to be hidden, so they are always present in the HTML.

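```jsx
// Always render the children so they end up in the server HTML;
// only hide them with CSS when they shouldn't be visible.
<div style={{ display: shouldRender ? '' : 'none' }}>
  {children}
</div>
```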
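The effect of this workaround can be illustrated with a plain-JavaScript simulation: the children are always part of the output, and only the `display` style depends on whether they should be shown. `renderToggledScreen` is a hypothetical name, and its string output only approximates the markup React would produce.

```javascript
// Hypothetical simulation of the div-based approach: children are always
// output, and only the inline display style depends on shouldRender.
function renderToggledScreen(shouldRender, childrenHtml) {
  const style = shouldRender ? "" : ' style="display:none"';
  return `<div${style}>${childrenHtml}</div>`;
}

const nav = '<nav><a href="/blog">Blog</a></nav>';
// Even when the elements should be hidden (as on the server),
// the link is still present in the HTML a crawler downloads.
console.log(renderToggledScreen(false, nav));
```

The trade-off is that hidden elements still add to the page's HTML size, but crawlers reading the initial HTML can see the links either way.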

Now that I updated my Screen component, the crawling tool can find all links and content. Hooray indeed!